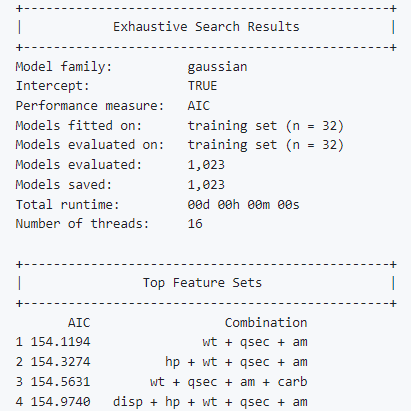

Exhaustive Search

R Package

A high-performance implementation of an exhaustive feature selection in R.

Motivation

Finding the optimal set of features for a prediction model is a widely studied problem. Multiple so-called suboptimal feature-selection techniques exist, which try to navigate through the feature space in an intelligent way to come up with a predictive subset of features.

The main reason these algorithms exist is that an optimal feature selection (ie. evaluating all combinations) is typically infeasible. This R package tries to broaden the horizon on that a little bit. With an efficient implementation and suitable set of model and evaluation settings, billions of feature sets can be evaluated in a reasonable time.

Development

For the best performance, I needed to use a fast programming language. I chose C++, as it nicely interacts with R. Another motivation was to learn a little bit more about this advanced programming language. I wanted to implement the simple OLS regression, but also Logistic Regression, which turned out to be a bit of a challenge.

The development had a lot of smaller and bigger challenges:

Memory management

Dealing with these orders of magnitude makes the most ordinary things difficult. For instance, just looping over all combinations to evaluate them is not trivial, as you cannot just compute all combinations first and then access them by index. So I needed a function that gives me the i-th combination of N over k total combinations by index.

Multithreading in C++

For better performance, we need parallelization. In C++ more efficiently threading. Splitting stuff up into batches makes the problems of memory management even more complicated.

Fitting a Logistic Regression

Contrary to OLS, the Logistic Regression has no closed form. So I needed a numeric optimizer to compute it. I found a C port of the FORTRAN implementation of the L-BFGS method, which I got to run in my C++ code. I tested the results and it matched what I got in R up to the last decimal point.

Results

The final R package was accepted in CRAN and can be simply installed:

install.packages("ExhaustiveSearch")A local benchmark on an AMD Ryzen 7 1700X on a data set of 500 observations resulted in the following performances:

Linear Regression: 45000 models/threadsec

Logistic Regression: 2500 models/threadsecOn a typical home-PC setup (16 threads), one could expect to evaluate 2,592,000,000 linear regression models per hour.